iscsi盘未生成磁盘路径

在创建物理云主机时,会将物理云主机的磁盘通过iscsi协议挂载到主机,然后通过/dev/disk/by-path目录下找到该磁盘,后续进行数据的读写。同事反馈挂载到主机后,在/dev/disk/by-path下找不到相应的目录。

问题环境

宿主机系统(host):CentOS Linux release 7.7.1908 (Core)

iscsi相关软件版本:

scsi-target-utils-1.0.55-4.el7.x86_64

iscsi-initiator-utils-6.2.0.874-11.el7.x86_64

iscsi-initiator-utils-iscsiuio-6.2.0.874-11.el7.x86_64

iscsi客户端执行步骤

和通用的客户端发现并登陆iscsi磁盘并没有什么的区别:

-

发现node

iscsiadm -m discovery -t st -p 192.168.100.16:3260 -

登陆session

iscsiadm -m node -p 192.168.100.16:3260 -T iqn.testxqk.disk1 --login -

查看状态

iscsiadm -m node -S -

重新扫描

iscsiadm -m node -T iqn.testxqk.disk1 -R

iscsi盘的清理动作步骤为:

-

登出session

iscsiadm -m node -T iqn.testxqk.disk1 -p 192.168.100.16:3260 -u -

删除发现信息

iscsiadm -m node -T iqn.testxqk.disk1 -p 192.168.100.16:3260 -o delete -

删除相关的目录

rm -rf /var/lib/iscsi/send_targets/192.168.100.16*

定位分析

login之后正常场景来说就会在by-path下生成相应的设备,但是在这个环境上竟然找不到。

-

查看磁盘

lsblk sdj 8:176 0 5G 0 disk使用lsblk可以看到新增的磁盘,并尝试对磁盘进行分区、格式化都可以,不影响正常的使用。

-

查找实际路径

udevadm info -q path -n /dev/sdj /devices/pci0000:00/0000:00:01.0/0000:01:00.0/host0/target0:2:12/0:2:12:0/block/sdj使用udevadm 可以看到/dev/sdj的实际路径,根据实际路径可以查询iscsi盘的来源

-

查找iscsi盘来源

udevadm info -q env -p /devices/pci0000:00/0000:00:01.0/0000:01:00.0/host0/target0:2:12/0:2:12:0/block/sdj DEVLINKS=/dev/disk/by-id/scsi-36101b5442bcc70002272a4fb08474624 /dev/disk/by-id/wwn-0x6101b5442bcc70002272a4fb08474624 /dev/disk/by-path/pci-0000:01:00.0-scsi-0:2:12:0 DEVNAME=/dev/sdj DEVPATH=/devices/pci0000:00/0000:00:01.0/0000:01:00.0/host0/target0:2:12/0:2:12:0/block/sdj DEVTYPE=disk MAJOR=8 MINOR=144 MPATH_SBIN_PATH=/sbin SUBSYSTEM=block从结果来看,返回的额外信息中并不带有任何的iscsi盘信息。正常的场景下返回的信息应该是:

udevadm info -q env -p /devices/pci0000:00/0000:00:01.0/0000:01:00.0/host0/target0:2:12/0:2:12:0/block/sdj DEVLINKS=/dev/disk/by-id/scsi-36101b5442bcc70002272a4fb08474624 /dev/disk/by-id/wwn-0x6101b5442bcc70002272a4fb08474624 /dev/disk/by-path/pci-0000:01:00.0-scsi-0:2:12:0 DEVNAME=/dev/sdj DEVPATH=/devices/pci0000:00/0000:00:01.0/0000:01:00.0/host0/target0:2:12/0:2:12:0/block/sdj DEVTYPE=disk DM_MULTIPATH_TIMESTAMP=1621931951 ID_BUS=scsi ID_MODEL=LSI ID_MODEL_ENC=LSI\x20\x20\x20\x20\x20\x20\x20\x20\x20\x20\x20\x20\x20 ID_PART_TABLE_TYPE=gpt ID_PATH=pci-0000:01:00.0-scsi-0:2:12:0 ID_PATH_TAG=pci-0000_01_00_0-scsi-0_2_12_0 ID_REVISION=4.27 ID_SCSI=1 ID_SCSI_SERIAL=0024464708fba472220070cc2b44b501 ID_SERIAL=36101b5442bcc70002272a4fb08474624 ID_SERIAL_SHORT=6101b5442bcc70002272a4fb08474624 ID_TYPE=disk ID_VENDOR=LSI ID_VENDOR_ENC=LSI\x20\x20\x20\x20\x20 ID_WWN=0x6101b5442bcc7000 ID_WWN_VENDOR_EXTENSION=0x2272a4fb08474624 ID_WWN_WITH_EXTENSION=0x6101b5442bcc70002272a4fb08474624 MAJOR=8 MINOR=144 MPATH_SBIN_PATH=/sbin SUBSYSTEM=block TAGS=:systemd: USEC_INITIALIZED=26970

是不是在发现设备时,系统创建磁盘过程中步骤报错?我们可以借助工具udevadm查看设备发现过程。

udevadm monitor [options] 监听内核事件和udev发送的events事件。使用方式如下:

udevadm monitor --property 输出事件的属性

udevadm monitor --kernel --property --subsystem-match=usb 过滤监听符合条件的事件

--kernel 输出内核事件

--udev 输出udev规则执行时的udev事件

--property 输出事件的属性

--subsystem-match=string 通过子系统或者设备类型过滤事件。只有匹配了子系统值的udev设备事件通过。

--tag-match=string 通过属性过滤事件,只有匹配了标签的udev事件通过。

先执行iscsi磁盘的清理动作,然后再新启动命令行窗口执行监听

udevadm monitor --property

然后重新登陆iscsi的session,查看事件列表,对比正常状态的下的事件列表可以发现:

在出问题的节点:

KERNEL[275969.968192] add /devices/platform/host14 (scsi)

ACTION=add

DEVPATH=/devices/platform/host14

DEVTYPE=scsi_host

SEQNUM=7186

SUBSYSTEM=scsi

KERNEL[275969.968261] add /devices/platform/host14/scsi_host/host14 (scsi_host)

ACTION=add

DEVPATH=/devices/platform/host14/scsi_host/host14

SEQNUM=7187

SUBSYSTEM=scsi_host

KERNEL[275969.968356] add /devices/platform/host14/iscsi_host/host14 (iscsi_host)

ACTION=add

DEVPATH=/devices/platform/host14/iscsi_host/host14

SEQNUM=7188

SUBSYSTEM=iscsi_host

KERNEL[275969.968474] add /devices/platform/host14/session4/iscsi_session/session4 (iscsi_session)

ACTION=add

DEVPATH=/devices/platform/host14/session4/iscsi_session/session4

SEQNUM=7189

SUBSYSTEM=iscsi_session

KERNEL[275969.986054] add /devices/platform/host14/session4/connection4:0/iscsi_connection/connection4:0 (iscsi_connection)

ACTION=add

DEVPATH=/devices/platform/host14/session4/connection4:0/iscsi_connection/connection4:0

SEQNUM=7190

SUBSYSTEM=iscsi_connection

...

而在正常的节点:

KERNEL[1204900.422800] add /devices/platform/host12 (scsi)

ACTION=add

DEVPATH=/devices/platform/host12

DEVTYPE=scsi_host

SEQNUM=8147

SUBSYSTEM=scsi

KERNEL[1204900.422879] add /devices/platform/host12/scsi_host/host12 (scsi_host)

ACTION=add

DEVPATH=/devices/platform/host12/scsi_host/host12

SEQNUM=8148

SUBSYSTEM=scsi_host

KERNEL[1204900.422934] add /devices/platform/host12/iscsi_host/host12 (iscsi_host)

ACTION=add

DEVPATH=/devices/platform/host12/iscsi_host/host12

SEQNUM=8149

SUBSYSTEM=iscsi_host

KERNEL[1204900.422977] add /devices/platform/host12/session2/iscsi_session/session2 (iscsi_session)

ACTION=add

DEVPATH=/devices/platform/host12/session2/iscsi_session/session2

SEQNUM=8150

SUBSYSTEM=iscsi_session

UDEV [1204900.423946] add /devices/platform/host12 (scsi)

ACTION=add

DEVPATH=/devices/platform/host12

DEVTYPE=scsi_host

SEQNUM=8147

SUBSYSTEM=scsi

USEC_INITIALIZED=4900422822

UDEV [1204900.424248] add /devices/platform/host12/scsi_host/host12 (scsi_host)

ACTION=add

DEVPATH=/devices/platform/host12/scsi_host/host12

SEQNUM=8148

SUBSYSTEM=scsi_host

USEC_INITIALIZED=423120

UDEV [1204900.424688] add /devices/platform/host12/iscsi_host/host12 (iscsi_host)

ACTION=add

DEVPATH=/devices/platform/host12/iscsi_host/host12

SEQNUM=8149

SUBSYSTEM=iscsi_host

USEC_INITIALIZED=4900423145

UDEV [1204900.424834] add /devices/platform/host12/session2/iscsi_session/session2 (iscsi_session)

ACTION=add

DEVPATH=/devices/platform/host12/session2/iscsi_session/session2

SEQNUM=8150

SUBSYSTEM=iscsi_session

USEC_INITIALIZED=4900423160

KERNEL[1204900.426760] add /devices/platform/host12/session2/connection2:0/iscsi_connection/connection2:0 (iscsi_connection)

ACTION=add

DEVPATH=/devices/platform/host12/session2/connection2:0/iscsi_connection/connection2:0

SEQNUM=8151

SUBSYSTEM=iscsi_connection

...

在正常节点下,会执行UDEV服务来重命名设备,而在出问题的节点上只是在内核中进行了注册,而没有再通过udev服务重命名磁盘设备,难道是服务出了问题?查看udev服务是否正常运行:

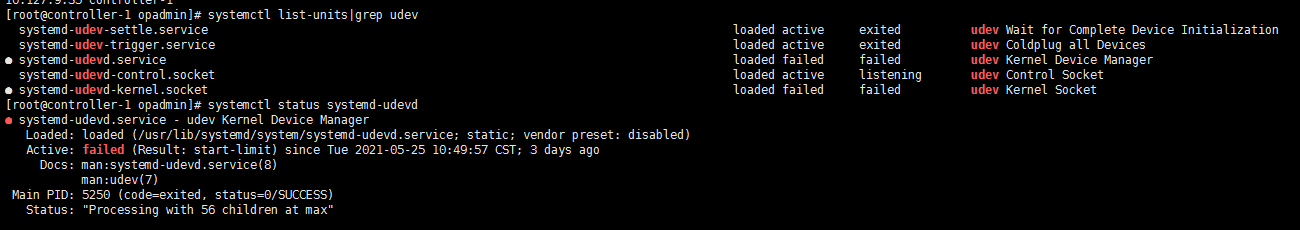

可以看到此时的systemd-udevd状态异常。

重新启动systemd-udevd,并查看状态是否正常。

systemctl restart systemd-udevd

然后清理iscsi盘,再次验证挂载,在/dev/disk/by-path可以看到相应的设备,问题的原因得到确认。

systemd-udevd状态异常

查看启动的message日志,定位下udev服务的原因

May 19 09:48:25 controller-1 systemd: Starting Notify NFS peers of a restart...

May 19 09:48:26 controller-1 systemd: Stopping udev Kernel Device Manager...

May 19 09:48:26 controller-1 sm-notify[5265]: Version 1.3.0 starting

May 19 09:48:26 controller-1 systemd: Stopped udev Kernel Device Manager.

May 19 09:48:26 controller-1 systemd: Started Notify NFS peers of a rest

May 19 09:48:26 controller-1 systemd: Stopped udev Kernel Device Manager.

May 19 09:48:26 controller-1 systemd: Started Notify NFS peers of a restart.

May 19 09:48:26 controller-1 systemd: start request repeated too quickly for systemd-udevd.service

May 19 09:48:26 controller-1 systemd: Failed to start udev Kernel Device Manager.

May 19 09:48:26 controller-1 rc.local: Job for systemd-udevd.service failed because start of the service was attempted too often. See "systemctl status systemd-udevd.service" and "journalctl -xe" for details.

May 19 09:48:26 controller-1 rc.local: To force a start use "systemctl reset-failed systemd-udevd.service" followed by "systemctl start systemd-udevd.service" again.

May 19 09:48:26 controller-1 systemd: Unit systemd-udevd.service entered failed state.

May 19 09:48:26 controller-1 systemd: systemd-udevd.service failed.

May 19 09:48:26 controller-1 systemd: rc-local.service: control process exited, code=exited status=1

May 19 09:48:26 controller-1 systemd: Failed to start /etc/rc.d/rc.local Compatibility.

May 19 09:48:26 controller-1 systemd: Unit rc-local.service entered failed state.

May 19 09:48:26 controller-1 systemd: rc-local.service failed.

May 19 09:48:26 controller-1 systemd: start request repeated too quickly for systemd-udevd.service

May 19 09:48:26 controller-1 systemd: Failed to start udev Kernel Device Manager.

May 19 09:48:26 controller-1 systemd: Unit systemd-udevd-kernel.socket entered failed state.

May 19 09:48:26 controller-1 systemd: systemd-udevd.service failed.

每次都是莫名其妙被重启,最终system认为systemd-udevd.service启动太频繁而不再拉起。

仔细查看还有rc-local.service,并且失败的原因是/etc/rc.d/rc.local这个脚本执行失败,查看脚本内容:

cat /etc/rc.d/rc.local

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

modprobe br_netfilter

systemctl restart systemd-udevd.service

systemctl restart systemd-udevd.service

systemctl restart systemd-udevd.service

systemctl restart systemd-udevd.service

systemctl restart systemd-udevd.service

systemctl restart systemd-udevd.service

可以看到在个脚本中有多次重启systemd-udevd的动作,所以导致系统在启动时重启过于频繁,系统误以为systemd-udevd有问题并置为失败状态。

继续排查部署环境的脚本,发现有添加此配置的脚本,在代码书写时没有考虑到异常场景,在特殊场景下会导致重复添加restart脚本到rc.local中,因此导致了此问题,整个排查过程到此结束。

参考文档